Will Gervais just posted a really, really cool simulation showing differences in the number of findings discovered by Dr. Power (who runs 100-person-per-condition studies, all day, every day) and Dr. Wide Net (who runs 25-person-per-condition pilot studies and follows up on promising – aka statistically significant – ideas). Both researchers have access to a limited pool of participants (4,000) in a given year. The question is: which strategy is better for netting creative new ideas?

Luckily for me, Will shared his code. The code is amazing, and Will is modest. It was easy to modify and extend to answer a few questions of my own. Specifically, Will presents the rate of “findings” (aka true positives) that each approach yields. But what about false positives? Missed effects (aka false negatives)? Correct rejections? Do Dr. Power and Dr. Wide Net differ on these other outcomes? My results are below – as figures instead of tables, sorry Will!

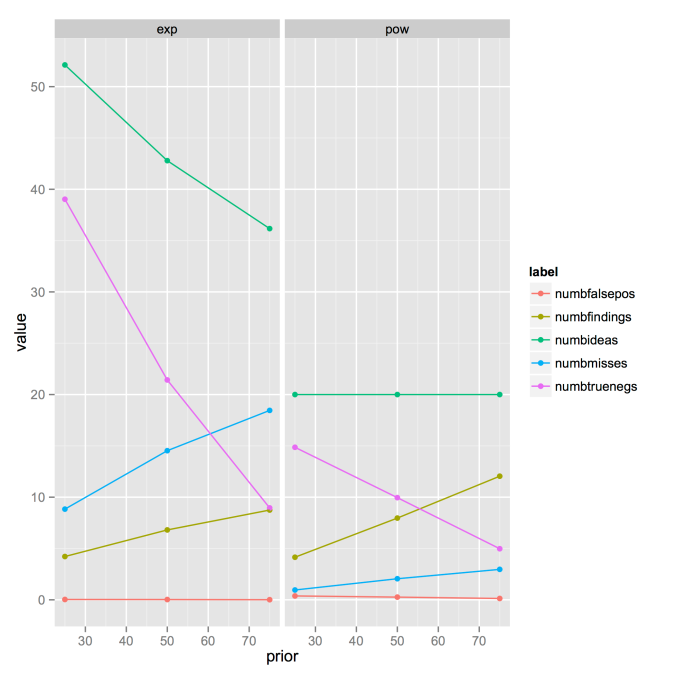

Dr. Power is on the right, and Dr. Wide Net is on the left. I ran the simulation at 3 different prior levels (.25, .50, .75), because I’m even lazier than Will claims to be (he’s obviously not, given this awesome sim). The green line represents the total number of ideas tested (I replicate Will’s finding that for Dr. Wide Net, the number of ideas tested goes down as the prior goes up, whereas for Dr. Power, the number of ideas tested is a direct function of n/cell and total N).
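The pattern in the number of ideas tested follows from the participant budget. Here is a rough expected-value sketch, not Will's code: it assumes two conditions per study, that Dr. Wide Net follows up every significant pilot with a 100-per-cell study, and a hypothetical pilot power of 0.30. Because a significant pilot triggers an expensive follow-up, and pilots come out significant more often when more ideas are true, each of Dr. Wide Net's ideas costs more participants as the prior rises.

```python
def ideas_power(total_n=4000, n_per_cell=100, cells=2):
    # Dr. Power: every idea gets exactly one full-size study
    return total_n / (n_per_cell * cells)


def ideas_wide_net(prior, total_n=4000, pilot_n_per_cell=25, followup_n_per_cell=100,
                   cells=2, pilot_alpha=0.10, pilot_power=0.30):
    # pilot_power=0.30 and the 100-per-cell follow-up are hypothetical assumptions
    # probability a pilot is significant: hits on true effects plus false alarms on nulls
    p_sig = prior * pilot_power + (1 - prior) * pilot_alpha
    # expected participants per idea: the pilot, plus a follow-up when the pilot is significant
    cost_per_idea = pilot_n_per_cell * cells + p_sig * followup_n_per_cell * cells
    return total_n / cost_per_idea


for prior in (0.25, 0.50, 0.75):
    print(prior, ideas_power(), round(ideas_wide_net(prior), 1))
```

With these made-up settings, Dr. Power always tests 20 ideas a year, while Dr. Wide Net's expected count falls from 50 to 40 as the prior goes from .25 to .75. The actual simulation spends a hard budget study by study rather than averaging, so its exact counts will differ.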

The yellow-y line is the number of true positives (“findings”) identified. Just as Will found, I find that as the prior goes up, Dr. Power makes more findings. (Note that my simulation sets the alpha for Dr. Wide Net’s pilot studies at .10, the same setting as Will’s Table 2.)

The purple line is the number of correct rejections, or true negatives (no effect exists, and the test returns a non-significant result). These go down as the prior goes up, by definition: a higher prior means fewer ideas with no real effect to correctly reject.

The blue line represents the number of misses – true effects that go undetected. Dr. Wide Net has a ton of these! Dr. Power misses very few effects. This makes sense, because Dr. Wide Net is sacrificing power for the ability to test many ideas, and lower power means more missed true effects, by definition. (However, for both Drs., misses increase as the prior increases. I don’t actually know why this is. Why should power decrease as the prior increases? Readers?)
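One possible explanation, sketched under simple assumptions: power depends on sample size, effect size, and alpha, not on the prior, so it need not change at all. If the true effect size stays fixed, the expected number of misses is the number of true effects tested times (1 − power), and a higher prior simply puts more true effects in the pool to miss. The sketch below covers a single-stage design like Dr. Power's; the 20 ideas per year and power of 0.80 are hypothetical settings, not values from Will's code.

```python
def expected_outcomes(n_ideas, prior, power, alpha=0.05):
    """Expected outcome counts when each idea gets one significance test."""
    n_true = n_ideas * prior          # ideas where an effect really exists
    n_null = n_ideas * (1 - prior)    # ideas where no effect exists
    return {
        "true_pos": n_true * power,
        "miss": n_true * (1 - power),           # false negatives
        "false_pos": n_null * alpha,
        "correct_rej": n_null * (1 - alpha),    # true negatives
    }


# hypothetical settings: 20 ideas per year, power fixed at 0.80
for prior in (0.25, 0.50, 0.75):
    out = expected_outcomes(n_ideas=20, prior=prior, power=0.80)
    print(prior, {k: round(v, 2) for k, v in out.items()})
```

Power is held constant across all three priors here, yet misses still climb with the prior while correct rejections fall. Dr. Wide Net's two-stage design is more complicated, but the same logic applies to her pilots.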

Now here’s where it gets really strange. It’s almost imperceptible in the graph above, but the rate of false positives is higher for Dr. Power than it is for Dr. Wide Net. Neither doctor has a particularly high false positive rate, but Dr. Power’s rate is higher. What’s going on? My hunch is that Dr. Wide Net’s filtering of the effects she studies (via pilot testing) is helping to lower the overall false positive rate of her studies.
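The hunch has a simple arithmetic core. For an idea with no real effect, Dr. Power gets a false positive whenever a single test crosses alpha = .05. Dr. Wide Net only gets one if that null idea clears the pilot (alpha = .10) and then clears the follow-up as well. A minimal sketch, assuming the follow-up is tested at .05 and is independent of the pilot:

```python
def false_pos_prob_single(alpha=0.05):
    # one study: a null idea becomes a false positive whenever p < alpha
    return alpha


def false_pos_prob_two_stage(pilot_alpha=0.10, followup_alpha=0.05):
    # assumption: independent pilot and follow-up; a null idea must clear both
    return pilot_alpha * followup_alpha


print(round(false_pos_prob_single(), 4), round(false_pos_prob_two_stage(), 4))
```

Under these assumptions, the pilot filter cuts the per-idea false positive probability for a null idea to one-tenth of what a single .05 test gives.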

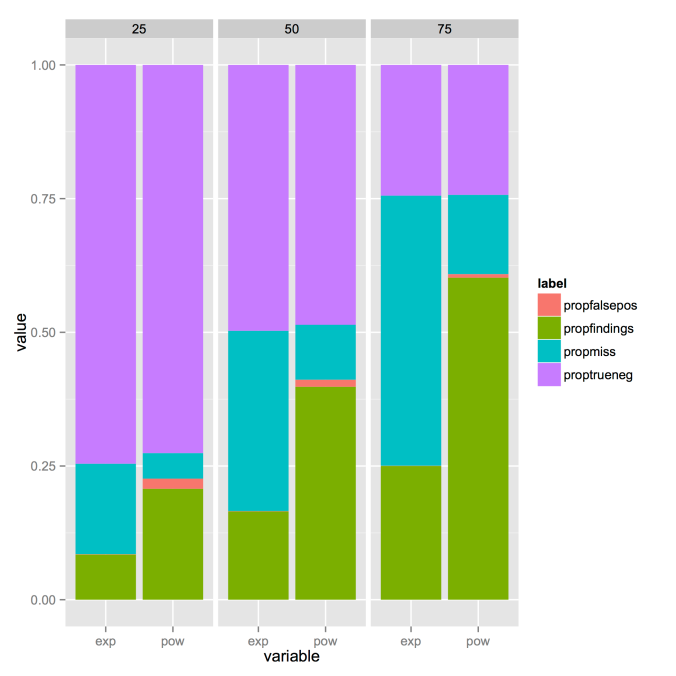

Let’s look at these results another way:

Here we can clearly see that false positives make up a more visible share of Dr. Power’s studies than of Dr. Wide Net’s (this figure shows the percentage of studies that yield each result). As we know, Dr. Wide Net does way, way more studies.

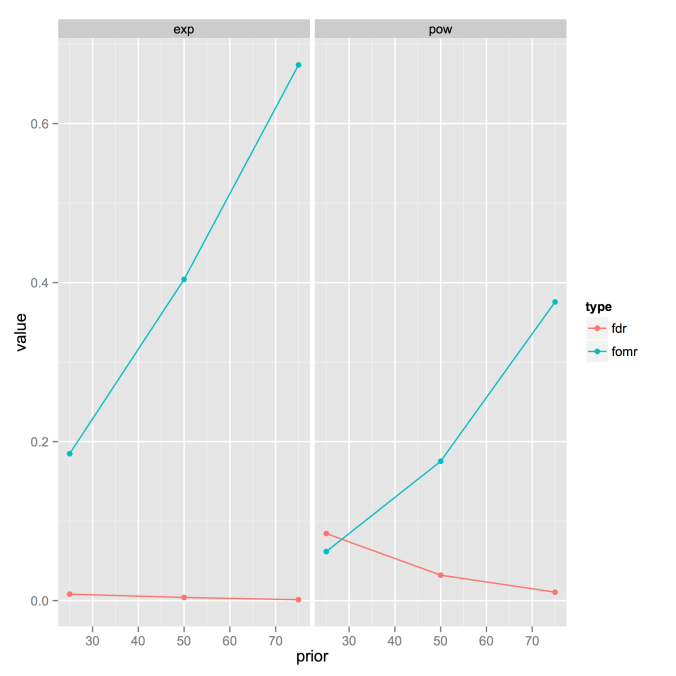

Another way to think about this is as the False Discovery Rate, or the proportion of statistically significant findings that are false positives. We can also consider the False Omission Rate, the proportion of non-significant findings that are missed (false negatives). Here’s a graph:

Dr. Power does have a higher false discovery rate (but the FDR decreases as the prior increases). Dr. Wide Net’s false discovery rate is almost zero. So this is a little weird, because it almost seems like a win for Dr. Wide Net.

BUT – and there’s always a but!

Dr. Wide Net’s False Omission Rate is off the charts. With a 50-50 prior, about 40% of Dr. Wide Net’s non-significant results are actually real effects. By contrast, at the same prior, only about 18% of Dr. Power’s non-significant results are real effects. Taking this together with efficiency (again, Dr. Wide Net has to do tons more studies than Dr. Power), I’m pretty sure the lower false discovery rate isn’t worth it.
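For anyone who wants to compute these two rates from outcome counts: FDR = FP / (FP + TP) and FOR = FN / (FN + TN). A minimal sketch; the counts in the usage line are made-up placeholders, not numbers from the simulation.

```python
def false_discovery_rate(tp, fp):
    # share of significant results that are false positives
    return fp / (tp + fp) if (tp + fp) else float("nan")


def false_omission_rate(fn, tn):
    # share of non-significant results that hide a real effect
    return fn / (fn + tn) if (fn + tn) else float("nan")


# hypothetical counts, for illustration only
tp, fp, fn, tn = 40, 2, 10, 48
print(round(false_discovery_rate(tp, fp), 3), round(false_omission_rate(fn, tn), 3))  # → 0.048 0.172
```

Returning NaN when there are no significant (or no non-significant) results avoids a division-by-zero error for strategies that happen to produce none in a given run.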

My code (a slightly modified version of Will’s) is here. I welcome corrections and comments!

I would imagine the number of misses goes up with the prior because an untested (Dr. Power) or un-followed-up (Dr. Wide Net) study is more likely to be a Type II error. My hunch would be the same as yours for the false positive issue.

Could you calculate an A’ or d’ from signal detection theory?

I’ve never done an SDT analysis, but it would be interesting. If you try it out, I’d be curious to know how it goes! (And sorry about the lengthy approval time for your comment; the approval request got buried in spam.)
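For the curious, here is one way the SDT setup might look. This is an assumption on my part, not something in Will's code: treat “a real effect exists” as the signal, so the hit rate is TP / (TP + FN) and the false alarm rate is FP / (FP + TN). Then d′ = z(H) − z(F), and A′ uses the standard nonparametric formula. The log-linear correction (add 0.5 to each count and 1 to each total) keeps rates of exactly 0 or 1 from producing infinite z-scores, which matters here because Dr. Wide Net's false alarm count can be near zero.

```python
from statistics import NormalDist


def sdt_indices(tp, fn, fp, tn, correction=0.5):
    """d' and A' treating 'a real effect exists' as the signal.

    Adds `correction` to hits and false alarms (and twice that to each total),
    a log-linear-style adjustment that keeps both rates strictly between 0 and 1.
    """
    hit = (tp + correction) / (tp + fn + 2 * correction)
    fa = (fp + correction) / (fp + tn + 2 * correction)
    z = NormalDist().inv_cdf
    d_prime = z(hit) - z(fa)
    # nonparametric A': one branch when hits exceed false alarms, the mirror otherwise
    if hit >= fa:
        a_prime = 0.5 + ((hit - fa) * (1 + hit - fa)) / (4 * hit * (1 - fa))
    else:
        a_prime = 0.5 - ((fa - hit) * (1 + fa - hit)) / (4 * fa * (1 - hit))
    return d_prime, a_prime


# hypothetical counts, for illustration only
print(sdt_indices(tp=40, fn=10, fp=2, tn=48))
```

A strategy with no discrimination at all (hits equal to false alarms) comes out at d′ = 0 and A′ = 0.5, which is a handy sanity check before plugging in the simulation's real counts.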