SPSP 2016 has just wrapped up and with it another year of fantastic meetings and discussion. This year, I (together with Jordan Axt, Erica Baranski, and David Condon) hosted a professional development session on daily open science practices – little things you can do each day to make your work more open and reproducible. You can find all of our materials for the session here, but I wanted to elaborate on my portion of the session concerning pre-registration.

A person approached me after the session and told me the following:

“I want to give this pre-registration thing a try, but I don’t know where to start. How can I show an editor that my work is pre-registered?”

So here it is: a how-to guide to pre-registration. As I said at SPSP, there is no single correct way to pre-register – scientists can choose to pre-register only locally (nothing online, just some documentation for themselves), privately (pre-registration plan posted online, but with closed access), or publicly (pre-registration plan posted online, in a registry, and free for all to see). The key ingredient across all of these approaches is that flexibility in analysis and design is constrained by pre-specifying the researcher’s plan (more on that in a bit). For now, let’s consider the options one by one.

1. Internal-only pre-registration: Within-team (local) documentation of study design, planned hypothesis tests and analyses, planned exclusion rules, and so on, prior to data collection.

Pros: Pre-registration in any form helps you slow down and be more sure that your project can test the question you want it to. I would argue that the quality of science improves as a result. You have protection, even if only to yourself and your team, against over-interpreting an exploratory finding (by decreasing hindsight bias or reducing hypothesizing after the results are known, aka HARKing).

Cons: An editor or reviewer doesn’t have evidence, apart from your word, that the pre-registration actually happened. A scientist’s word is worth a lot, but when it comes to convincing a skeptic, you might have a tough time.

Options: Your imagination is the limit when it comes to thinking of ways to do internal documentation. You could go old-school and write longhand in ink in a lab notebook. You could use Evernote or Google Docs or some other kind of cloud-based document storage. The key is that you make your notes to yourself (and perhaps your local team), and those notes don’t get edited later on. They are just a record of your plans. I should note that you would benefit from using a standard type of template (more on templates in a minute), if only so that you don’t forget to think through the most important factors in your study (trust me, forgetting happens to the best of us).
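If your internal notes live in a file, one way to check later that they "don’t get edited" is to store a fingerprint of the file when you finish writing it. Here is a minimal sketch in Python, assuming the plan is saved as a single file. The function names and file names are made up for illustration and are not part of any pre-registration tool. A self-made record like this only helps you and your team, and is no more convincing to a skeptic than your word.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def fingerprint(path):
    """Return the SHA-256 hex digest of the file's bytes."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def freeze(plan_path, record_path):
    """Save a small JSON record: file name, digest, and the current UTC time."""
    record = {
        "file": Path(plan_path).name,
        "sha256": fingerprint(plan_path),
        "recorded_utc": datetime.now(timezone.utc).isoformat(),
    }
    Path(record_path).write_text(json.dumps(record, indent=2))
    return record


def is_unchanged(plan_path, record_path):
    """True if the plan still matches the digest stored by freeze()."""
    record = json.loads(Path(record_path).read_text())
    return fingerprint(plan_path) == record["sha256"]
```

You would call `freeze("prereg_plan.txt", "prereg_plan.record.json")` once before data collection (both file names are hypothetical) and `is_unchanged(...)` with the same arguments whenever you want to confirm the plan is still the one you wrote. Note that the timestamp comes from your own computer's clock, so it is a convenience for your records and not independent evidence.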

2. Private pre-registration: Same as internal-only pre-registration, except you post the pre-registration privately to a repository. Private pre-registrations can be selectively shared with editors and reviewers to prove that a pre-registration occurred as specified.

Pros: You cannot be “scooped” – meaning your ideas stay private until you choose to release them, but you can definitively prove that your (perhaps unorthodox) analysis was the plan all along.

Cons: You cannot attract collaborators, either. Others working in a similar area don’t know what you’re up to, and you might miss out on a valuable collaboration. For the field writ large, this isn’t a very attractive long-term option, because we don’t get a record of abandoned projects either – studies that for whatever reason don’t make it past the data collection stage and into the published literature.

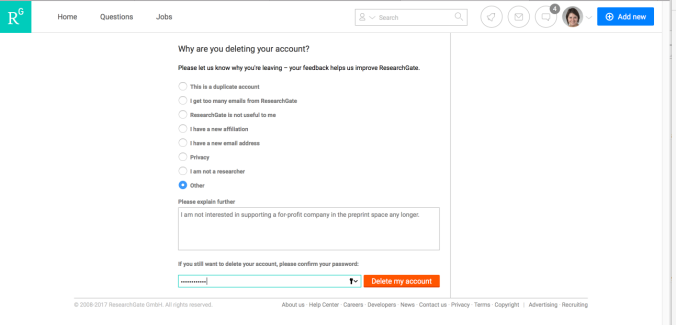

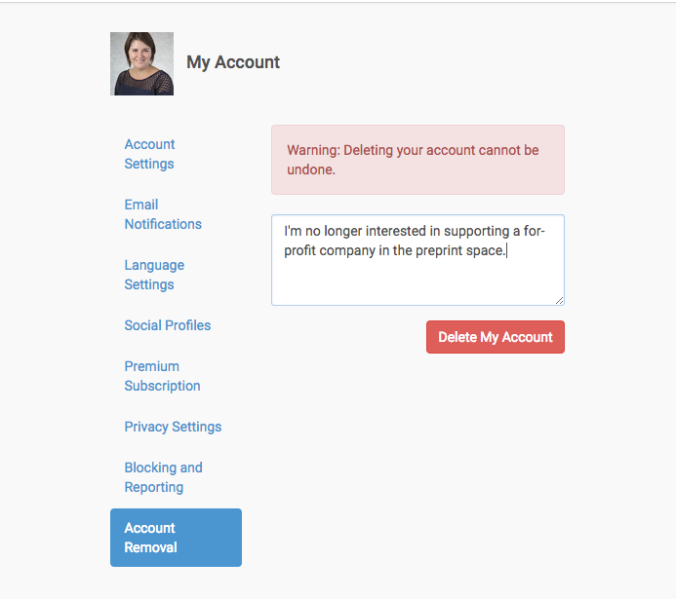

Options: For easy-to-do private pre-registration, you can’t beat aspredicted.org. One author on the team simply answers 9 questions about the planned project, and a .pdf of the pre-registration is generated. Pre-registrations can stay private indefinitely on aspredicted.org, but authors do have the option to generate a web link to share with editors/reviewers. Another option would be to use the Open Science Framework (osf.io). The OSF has a pre-registration function that researchers can choose to make private for up to 4 years (at which point, the pre-registration does become public). The pre-registration function freezes the content of an OSF project so that a record of the project is preserved and can no longer be edited. As an alternative to the pre-registration function, the OSF timestamps all researcher activity on the site, and it allows researchers to keep their (non-registered) projects private indefinitely. This means that a researcher could post a document containing a pre-registration to their private project and use the OSF timestamping system to prove to an outside party when the pre-registration occurred, relative to when data were collected. This workaround is clunky, so researchers who want indefinitely private pre-registrations will likely want to use aspredicted.org, or else use the OSF and accept that after the researcher-determined embargo period of up to 4 years, their pre-registrations will become public. Again, the public vs. private distinction has downstream consequences for the field, because public pre-registrations allow researchers to understand the magnitude of the file drawer problem in a given area of the literature.

3. Public pre-registration: Same as private pre-registration, except that researchers post their plans publicly on the web.

Pros: Fully open, complete with mega-credibility points. Your work is fully verifiable to an outside party. Outside parties can contact you and ask to collaborate. As a side note, we all have projects that are interesting and potentially fruitful, but that get left by the wayside due to lack of time or other constraints. To me, pre-registration (or really any form of transparent documentation) is a way of keeping track of these projects and letting others pick them up as the years go on (I have this fantasy that when a student joins my lab, I’ll be able to direct them to the documentation of an in-progress, but stalled, project, and they’ll just pick it right back up where the previous student faltered). So there are potential benefits of increased transparency and better record keeping beyond the Type I error control that proponents of pre-registration are so quick to note.

Cons: Scooping? I’m not sure this is a real concern, but insofar as people have anxiety about it, it needs to be addressed. If you make your whole train of logic/program of research fully transparent, there is always the risk that someone better/smarter/faster/stronger than you will swoop in and run off with the idea. To me, the potential for fruitful collaborations far outweighs the risk of scooping, and actually both are trumped by a third possibility, which is that all this documentation won’t attract much attention at all. In my own experience, a handful of people are interested, but mostly my work goes on as usual. Others have noted that public pre-registration actually could help you stake a claim on a project, insofar as you are able to demonstrate the temporal precedence of the idea relative to the alleged scooper. A final con is that there is a time cost to getting the study materials up to snuff for public consumption. However, as I noted before, the quality of the work likely increases, and the project is less likely to get shelved if a collaborator loses interest or there are other hiccups down the road. I’m a big fan of designing studies so that they are informative, null results or not, so that there is (ideally) no such thing as a “failed” study, and instead only limitations in our time, motivation, and fiscal resources to publish every (properly executed) study. Doing a good job of documentation on the front end of a project means that even if you never get around to publishing a boring/null/whatever result, a future meta-analyst could, with some ease, find your project and incorporate it into their work.

Options: The OSF is likely to be your best bet at this point, and although the OSF is a powerful, flexible system, it is not the most user-friendly for beginners. However, the time invested in learning the system more than pays for itself down the road. Anna van’t Veer and Roger Giner-Sorolla have a nice step-by-step guide that explains how to create and pre-register a new project on the OSF. The Center for Open Science pre-registration challenge also has a bunch of materials that will help you get started. And if you want to do the pre-registration challenge, and you’re an R user, you’ll definitely want to check out Frederik Aust’s prereg package for R.

Regardless of which option you choose to pursue, I would encourage you to think about using a template (either make your own or use someone else’s) so that you get all of the most important details of your project ironed out ahead of time. At some point, once you have your data in hand, you will realize that you’ve forgotten to specify something important. That’s OK, and you ought to just honestly report such discrepancies and move on. Don’t let perfect be the enemy of done.

Templates:

- Alison Ledgerwood’s internal pre-reg template

- Sample aspredicted.org pre-reg form

- Sample pre-reg challenge form (from Aust’s prereg R package)

Feedback, comments, and questions welcome! Leave a note on the post, write me on Twitter (@katiecorker), or shoot me an email (corkerk at kenyon dot edu).